Want to fool a computer vision system? Just tweak some colors

Research into machine learning and the interesting AI models created as a consequence are popular topics these days. But there’s a sort of shadow world of scientists working to undermine these systems — not to show they’re worthless but to shore up their weaknesses. A new paper demonstrates this by showing how vulnerable image recognition models are to the simplest color manipulations of the pictures they’re meant to identify.

It’s not some deep indictment of computer vision — techniques to “beat” image recognition systems might just as easily be characterized as situations in which they perform particularly poorly. Sometimes this is something surprisingly simple: rotating an image, for example, or adding a crazy sticker. Unless a system has been trained specifically on a given manipulation or has orders to check common variations like that, it’s pretty much just going to fail.

In this case it’s research from the University of Washington led by grad student Hossein Hosseini. Their “adversarial” imagery was similarly simple: switch up the colors.

Many of you have probably tried something similar when fiddling around in an image manipulation program: by changing the “hue” and “saturation” values on a picture, you can give someone green skin, make a banana appear blue and so on. That’s exactly what the researchers did: twiddled the knobs so a dog looked a bit yellow, a deer looked purplish, etc.

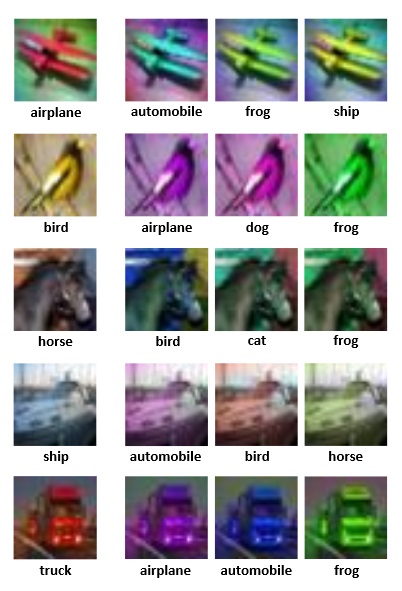

The original images are at left; color-shifted versions and the systems’ best guesses at right.

Critically, however, the “value” of each pixel, meaning how light or dark it is, wasn’t changed, so the images still look like what they are, just in weird colors.
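For the curious, here is a minimal sketch of that kind of manipulation using Python's standard `colorsys` module. It is an illustration, not necessarily the researchers' exact procedure: the function name `shift_colors`, the pixel format (RGB tuples of floats between 0 and 1) and the choice to wrap hue and clamp saturation are all assumptions made for this example.

```python
import colorsys

def shift_colors(pixels, hue_shift, sat_shift):
    """Shift hue and saturation of each pixel while leaving its value alone.

    `pixels` is a list of (r, g, b) tuples with components in [0, 1].
    This format is an assumption chosen to keep the example short.
    """
    shifted = []
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r, g, b)
        h = (h + hue_shift) % 1.0              # hue is circular, so wrap around
        s = min(1.0, max(0.0, s + sat_shift))  # keep saturation within [0, 1]
        # v (how light or dark the pixel is) is passed through unchanged
        shifted.append(colorsys.hsv_to_rgb(h, s, v))
    return shifted

# Hypothetical three-pixel "image": orange, blue and grey
image = [(0.8, 0.4, 0.2), (0.1, 0.5, 0.9), (0.3, 0.3, 0.3)]
recolored = shift_colors(image, hue_shift=0.5, sat_shift=0.1)
```

Because only hue and saturation move, the brightest channel of every pixel stays the same, which is why shapes and shading survive the recoloring.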

But while a cat looks like a cat to us whether it’s grey or pink, one can’t really say the same for a deep neural network. The accuracy of the model they tested was reduced by 90 percent on sets of color-tweaked images that it would normally identify easily. Its best guesses are pretty random, as you can see on the right side of the figure above. Changing the colors totally changes the system’s guess.

The team tested several models and they all broke down on the color-shifted set, so it wasn’t just a consequence of this specific system.

It’s not too hard to fix — in this case, all you really need to do is add some labeled, color-shifted images into the training data so the system is exposed to them beforehand. This addition brought success rates back up to reasonable (if still fairly poor) levels.
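A fix along those lines can be sketched in a few lines of Python. This is a rough illustration under stated assumptions rather than the team's actual training code: the helper names `random_color_shift` and `augment`, the dataset format (a list of image and label pairs, with each image a list of RGB tuples in the 0 to 1 range) and the range of the random shifts are all hypothetical.

```python
import colorsys
import random

def random_color_shift(image, rng):
    """Return a copy of `image` with one random hue/saturation shift applied."""
    hue_shift = rng.random()            # anywhere around the color wheel
    sat_shift = rng.uniform(-0.5, 0.5)  # hypothetical range, for illustration only
    shifted = []
    for r, g, b in image:
        h, s, v = colorsys.rgb_to_hsv(r, g, b)
        h = (h + hue_shift) % 1.0
        s = min(1.0, max(0.0, s + sat_shift))
        shifted.append(colorsys.hsv_to_rgb(h, s, v))  # value is left unchanged
    return shifted

def augment(dataset, copies_per_image=2, seed=0):
    """Return the original examples plus color-shifted copies with the same labels."""
    rng = random.Random(seed)  # seeded so the augmentation is repeatable
    augmented = list(dataset)
    for image, label in dataset:
        for _ in range(copies_per_image):
            augmented.append((random_color_shift(image, rng), label))
    return augmented

# Hypothetical toy dataset: two tiny "images" with their labels
training_data = [
    ([(0.8, 0.4, 0.2), (0.1, 0.5, 0.9)], "dog"),
    ([(0.2, 0.6, 0.3)], "deer"),
]
bigger_training_data = augment(training_data, copies_per_image=3)
```

The key detail is that each recolored copy keeps its original label, so the model is told that a purplish deer is still a deer.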

But the point isn’t that computer vision systems are fundamentally bad at color or something. It’s that there are lots of ways of subtly or not-so-subtly manipulating an image or video that will devastate a system’s accuracy or subvert it outright.

“Deep networks are very good at learning (or better memorizing) the distribution of training data,” wrote Hosseini in an email to TechCrunch. “They, however, hardly generalize beyond that. So, even if models are trained with augmented data, it’s likely that we can come up with a new type of adversarial images that can fool the model.”

A model trained to catch color variations might still be vulnerable to attention-based adversarial images and vice versa. The way these systems are created and encoded right now simply isn’t robust enough to prevent such attacks. But by cataloguing them and devising improvements that protect against some but not all, we can advance the state of the art.

“I think we need to find a way for the model to learn the concepts, such as being invariant to color or rotation,” Hosseini suggested. “That can save the algorithm a lot of training data and is more similar to how humans learn.”

You can read the full pre-print paper on arXiv (PDF).